NeRF (Neural Radiance Field)

Motivation

Neural Radiance Field (NeRF) is one of the interesting topics, which is kinds of extension of SIREN (Sinusoidal Representation Networks). NeRF is a method for representing 3D scenes using neural networks, specifically designed for novel view synthesis. Before this paper, there were Soft3D, Multiplane Image Methods (Multi-Layer, if views are different=>Orthogonal, it can’t be rendered), Neural Volumes (Memory Consumption issue <=> Resolution Issues), and many more. Most of them uses explicit 3D representation, such as voxel grids or points cloud. As you may know voxel representation can (1) leads to discretization artifacts or degrades view-consistency and (2) large memory consumption. But NeRF uses Implicit feature representation (Ligher than voxel representation), and continuous volumetric scene function. In detail or result, neural Radiance Field (NeRF) encodes a continuous volume within the deep neural networks, whose input is a single 5D Coordinate (spatial location (x, y, z) and viewing direction (θ, φ)) and output is the volume density and view-dependent emitted radiance(RGB Color) at that spatial location.

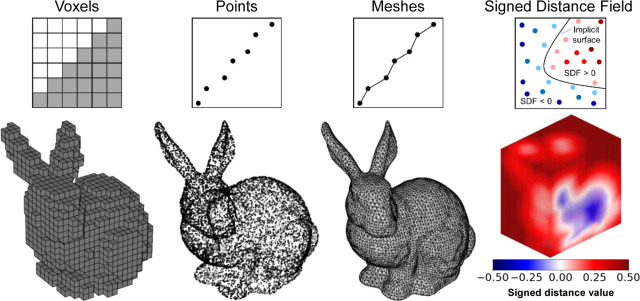

The image shown below is the overview of Explicit Representation vs Implicit Representation. As you can see, Explicit Representation uses Voxel Grid, Mesh, and Point Cloud, but Implicit Representation uses Signed Distance Function & Fields (SDF).

Background: Neural Fields(Coordinate-Based Neural Networks), Periodicity, Learning to Map

In order to understand NeRF, we need to understand the “Periodicity” and “Neural Fields” that underpin modern neural rendering techniques.

Neural Fields represent signals as continuous functions parameterized by neural networks. Rather than storing data in discrete grids or voxels, neural fields map input coordinates directly to output values - whether that’s color, density, signed distance, or any other signal. This idea shift allows us to represent complex 3D scenes implicitly through learned function approximations. For example, given an image, we train model with function that maps f_theta(x, y) -> RGB at position (100.4, 200.7) in the image, and compute this in neural network by inputing (x, y) coordinates. This makes us to query the scene at specific coordinates supporting different resolution.

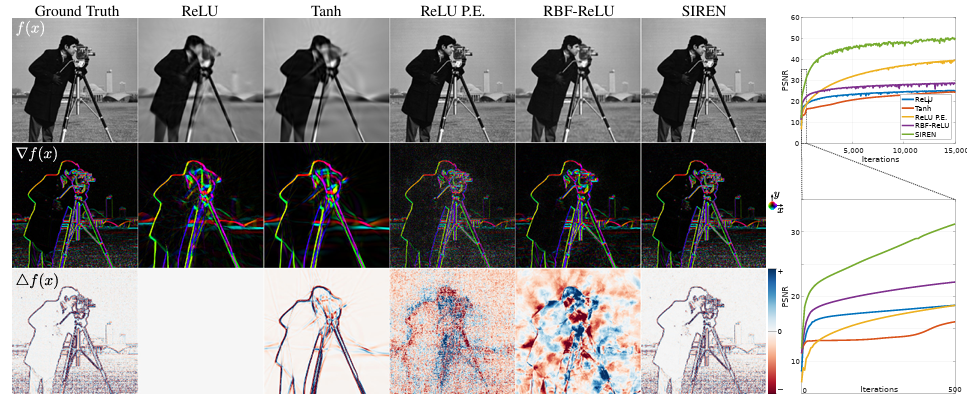

There were major difficulties before neural field, which was spectral bias problem. Neural networks inherently suffer from spectral bias—they preferentially learn low-frequency functions and struggle with high-frequency details. A standard multilayer perceptron (MLP) with ReLU or similar activations tends to produce overly smooth outputs, missing fine-grained textures and sharp edges critical for photorealistic rendering. The image below shows ReLU and similar activations produce piecewise-linear outputs with zero second derivatives, making it hard to model fine detail or higher-order signal derivatives. This tells us the limitation becomes problematic when representing 3D scenes, which contain high-frequency details like edges, textures, and fine geometric features.

So, there are workaroudns can be used are in practice, for example, adding fixed Fourier features or sinusoidal positional encodings on the inputs. SIREN takes a more direct approach: it uses periodic (sine) activations throughout the network to build high-frequency capacity natively.

Let’s talk about SIREN(Sinusoidal Representation Network) little bit. This simply replaces standard nonlinearities with sine function. Concretely, computing this in each layer: \[x_{\ell+1} = \sin(\mathbf{W}_\ell \mathbf{x}_\ell + \mathbf{b}_\ell)\]

Why periodicity helps (SIREN)?

so, every neuron is a periodic oscillator. Why do that? there are two advantages using this. (1) Rich Frequency Bias, each weight vector $\mathbf{w}$ acts as an angular frequency for that neuron and each bias as phase offset. a SIREN is deep superposition of sinusoids. Adjusting this $\mathbf{w}$, the network can generate signal at arbitrarily high frequencies. Larger magnitude weights induce higher-frequency components, while smaller weights yield lower frequencies. SIRENs embedded a broad Fourier basis internally, making them naturally suited to fit complex, oscillatory signals. This is in contrast to ReLU/tanh networks, whose nonlinearities inherently bias toward smooth, low-frequency functions. (2) Smooth Derivatives The sine function is smooth and infinitely differentiable. Its derivative is a cosine (a phase-shifted sine), and further derivatives remain sinusoidal. In fact, as the authors note, “any derivative of a SIREN is itself a SIREN,” because $\frac{d}{dx}\sin(x)=\cos(x)$. This means a SIREN can represent not just a signal but also its gradient and Laplacian accurately. For physics-based tasks (e.g. solving PDEs or learning fields from gradient samples), this is crucial: SIRENs can easily encode higher-order derivatives, whereas ReLU networks have piecewise-constant first derivatives and zero second derivatives. Why am I focusing on this SIREN because this is preliminary information for positional encoding in NeRF.

SIREN Initialization and Training Behavior

Just to give you the heads up, the author mentioned that the SIREN needs to be initialized carefully, By drawing weights from a scaled normal distribution (standard deviation $\sqrt{2/n}$) and choosing the first-layer frequency scale $w_0$ so that $\sin(w_0 x)$ spans many periods over the input range, the authors ensure each layer’s pre-activations stay near unit variance. Concretely, they find setting $w_0\approx30$ (so that $\sin(w_0 x)$ oscillates quickly over $x\in[-1,1]$) yields fast, robust convergence. This principled init prevents the network output from collapsing or exploding with depth, preserving gradient flow. With this setup, SIRENs train stably via standard optimizers like Adam.

With this setup, SIRENs exhibit stable gradient behavior. The sine derivative $\cos(x)$ is bounded and nonzero almost everywhere, avoiding the dead zones of ReLU or saturation of $\tanh$. Empirically, Sitzmann et al. show that SIRENs converge much faster than baseline ReLU/tanh networks. For example, fitting a single 2D image takes only a few hundred iterations (seconds on a GPU) with SIREN – orders of magnitude faster than naive MLPs – while reaching higher fidelity. This is because the network immediately has the capacity to represent needed frequencies, rather than slowly adapting to them during gradient descent. In fact, subsequent analysis (Chandravamsi et al.) confirms that without proper init, even SIRENs can exhibit a form of “spectral bottleneck” – underscoring the importance of the initialization scheme.

Volumetric Rendering

Some notes are from Volumetric Rendering Techniques GPU Gems and my post about Volume Rendering

To summarize to one point is that volme rendering itself is differential function. The goal in NeRF is to calculate the loss between GT and MLP output image.

NeRF: Neural Radiance Field

NeRF Key Points

- This paper proposes a method that synthesizes novel view of complex scenes by optimizing an underlying continuous volumetric scene function using a sparse set of input views.

- This algorithm represents a scene using a fully-connected deep network, whose input is a single 5D Coordinates and whose output is the volume density and view-dependent RGB Color at that spatial location.

- Classical volume rendering techniques are used to accumulate those colors and densities into a 2D Images

NeRF Overview

Since we cover the major components above, let’s look into details of NeRF